SEO Audit Services

Our SEO audit services identify the areas where your website is lacking or failing to capture search traffic. We will audit your website from an SEO perspective, give you a comprehensive action plan and help with implementation. Our SEO services focus on implementing the areas identified for growth.

4.7 out of 5 from 200+ reviews

SEO audit services

Mojo Dojo’s SEO audit services are complimentary for your business. We have seen other agencies charge as much as $3000 for the same audits.

With our SEO packages, you will also receive a comprehensive timeline for implementing our SEO audit findings and a steady path to organic traffic and authority growth.

Many businesses think of SEO as a set-and-forget strategy or a one-off implementation. Our SEO audit report and plan will also include a comprehensive schedule of the changes or improvements you need to make to ensure that you not only rank but also stay on top over time.

We will consider a number of factors including your company age, products or services, your market and competition, your goals from the SEO project and your SEO strategy.

Our SEO audit is also followed by a plan to improve your topical relevance, including helping you create a topical map for SEO so that you can dominate your niche and competition in organic search.

Mojo Dojo is an Australian SEO company with 15+ years of experience in digital marketing. We are a Melbourne SEO agency that has a local presence in Adelaide, Brisbane, Sydney, Perth and other regional parts of Australia.

SEO Clients & Results

See some of our impressive clients & results

- 220% increase in revenue YoY

- 138% increase in bookings

- 6117% increase in traffic revenue

- 498.27% increase in leads

- 550% increase in organic growth

- 34% increase in organic traffic

- 198% increase in organic traffic

- 787.61% increase in revenue

Benefits of an SEO audit

There are a number of benefits to performing a yearly or bi-yearly SEO audit on your site. SEO audits can usually uncover issues with Google’s crawl budget, such as Google’s crawler spending a disproportionate amount of time on certain sections of your website.

Some of the main benefits of SEO audits include:

- Discover technical challenges and issues with your website that are hard to detect without an audit

- Discover wasted crawl budgets or deleted pages that had high authority

- Improve content including discovering canonical or duplicate content issues

- Improve images, videos, copy and other visual elements, which also improves the speed of your site

- Optimise site hierarchy including the site structure, orphaned pages or categories and improve the overall linking of the website.

An SEO audit is the process of finding opportunities to improve a site’s organic rankings.

You can perform an SEO audit when you launch the site or regularly to impact your overall rankings.

Everyone differs in how they do an SEO audit. There is no universally acceptable way as Google’s search algorithm is not transparent.

Both your on-page SEO and your technical SEO need to be on point for an SEO audit to be effective.

Mobile first or mobile friendliness

Google, in its numerous studies about mobile devices, has indicated that mobile is the future.

In fact, Google has started using Googlebot Smartphone as the indexing crawler for most websites.

With mobile being the priority for Google, it makes sense to check your mobile friendliness and usability.

Google will flag a problem if it encounters any of the following:

- Uses incompatible plugins

- Viewport not set

- Viewport not set to “device-width”

- Content wider than screen

- Text too small to read

- Clickable elements too close together
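Two of the checks in the list above, "Viewport not set" and "Viewport not set to device-width", can be tested against a page's HTML. The following is a minimal sketch using only the Python standard library. The function name `check_viewport` and the sample HTML are hypothetical, and this is not how Google's own tooling works.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Collects the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.viewport is not None:
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "viewport":
            self.viewport = attr_map.get("content", "")


def check_viewport(html):
    """Return a short status string for the page's viewport meta tag."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return "viewport not set"
    # Split "width=device-width, initial-scale=1" into key/value pairs.
    settings = {}
    for part in parser.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return "viewport not set to device-width"
    return "ok"


# Hypothetical sample pages, for illustration only.
responsive = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
fixed_width = '<head><meta name="viewport" content="width=1024"></head>'

print(check_viewport(responsive))
print(check_viewport(fixed_width))
print(check_viewport("<head></head>"))
```

The other items in the list (text size, tap target spacing, content width) depend on the rendered layout, so they need a real browser or a tool like Lighthouse rather than a static HTML check.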

We will help you manage your mobile responsiveness and uncover problems with your templating as a part of our SEO audit services.

Robots.txt & https issues

Consider the Robots.txt as a file that you should audit often.

Robots.txt is a request to Google and other search engines to politely crawl or not crawl your site. Crawlers may or may not choose to follow these requests.

It is still recommended that you have a Robots.txt in place because major search engines do respect the file. The Robots.txt is usually found in the root directory of the website.

You can view any website’s robots.txt by going to its root URL followed by /robots.txt

In our case the file is at

Robots.txt can help maximize your crawl budget. This means that Google will get to important pages faster and more often. You don’t want Google’s crawlers to be stuck in a loop on search pages or spending time on irrelevant pages on your site.

A small error in robots.txt can make your site disappear from search engines. Google may drop your pages from its index if you block your entire site, and it is not very difficult to do so by accident.

So exercise caution when editing your robots.txt file. Remember that different crawlers interpret robots.txt syntax differently. Every SEO audit should include looking at your robots.txt again.
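One way to guard against an accidental block is to test a list of important URLs against the robots.txt rules before publishing a change. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the URLs and the helper name `blocked_urls` are hypothetical. Python's parser does not interpret rules exactly the way Googlebot does (for example, it has no wildcard support), so treat the result as a first sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /cart/
"""

# A single stray rule like this one blocks the entire site.
BLOCK_EVERYTHING = """\
User-agent: *
Disallow: /
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs that should always stay crawlable.
important = [
    "https://example.com/",
    "https://example.com/services/seo-audit/",
]

print(blocked_urls(ROBOTS_TXT, important))        # nothing important is blocked
print(blocked_urls(BLOCK_EVERYTHING, important))  # every URL is blocked
```

Running a check like this on each robots.txt edit makes a site-wide block visible before a crawler sees it.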

It’s 2024 and SSL certificates are free to install.

Let’s Encrypt caters to almost all types of web servers. In fact, web servers like Caddy have built-in SSL deployment. So it goes without saying that you should have an SSL certificate installed on your server.

This means your website will load over HTTPS.

We will help you uncover both robots.txt and HTTPS-related issues as a part of our SEO audit services.

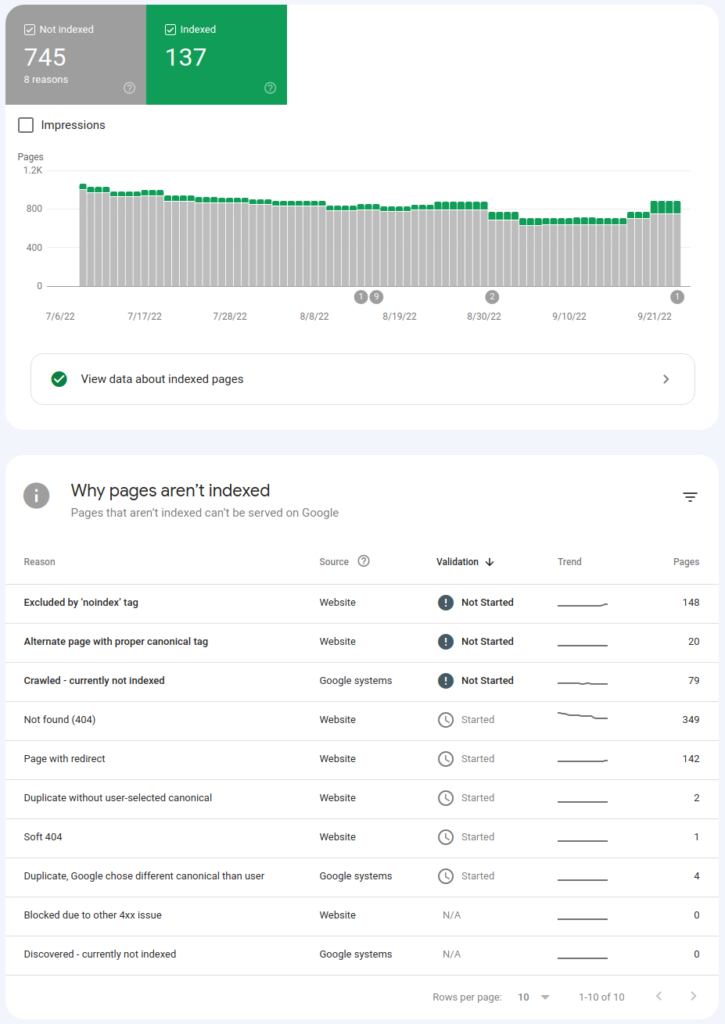

Indexability report

The indexability report is available in your search console.

Simply head to Search Console > Your domain > Pages

In this report, Google lists the issues it has found with your website, such as:

- Pages not found / 404 errors

- Pages with redirects

- Duplicate without user-selected canonical

- Soft 404s

- Duplicate. Google chose different canonical than user

- Crawled but currently not indexed

This is perhaps the most time consuming part of any SEO audit.

As a part of the audit, we will explain each issue, its pros and cons, and our recommendations based on what Google is indicating in the report.

Google indexability problems are generally indicative of other issues like server configs or CMS configs.

Other audit items

We will cover a number of other things as a part of this audit.

Audit On-page SEO

On-page SEO is key to making sure that you have optimised your site for ranking on search engines.

The basics of On-Page SEO are

- Target one page for one primary keyword

- Keyword research before building your service pages

- Focus on writing great content

- Optimise your meta titles/snippets/URLs/site architecture

- Ensure you have internal links and they are relevant

- Look at your anchor texts and be precise

- Fix duplicate content

- Check your technical SEO like robots.txt, sitemap.xml, crawlability, indexability report.

- Have a good content strategy

- Improve your text readability

- Improve your media and images to load faster

- Update content often as content recency matters.

- Get an SSL certificate

- Improve your page speed

Improve Content

The best way to find content to improve is to look at declining content.

Content may decline if it is seasonal or is implied seasonal.

An SEO audit should focus on a long term plan to address declining content.

You can quickly audit declining content from Google search console.

- In the search console, head to the Performance report

- Set the date range to the last 6 months and compare it with the previous 6 months. A longer comparison is limited because Google only stores 16 months of data in Search Console.

- Click the pages tab to see all pages

- Sort the table by clicks.

Look for content that is declining.
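If you export the two periods from the Performance report, the same comparison can be scripted. The sketch below assumes you have already loaded clicks per page for each period into two dictionaries. The page paths, click counts and the function name `declining_pages` are hypothetical.

```python
def declining_pages(current_clicks, previous_clicks):
    """Return (page, previous, current, change) rows for pages that lost clicks,
    with the largest loss first."""
    rows = []
    for page, previous in previous_clicks.items():
        # A page missing from the current period counts as zero clicks.
        current = current_clicks.get(page, 0)
        if current < previous:
            rows.append((page, previous, current, current - previous))
    return sorted(rows, key=lambda row: row[3])


# Hypothetical click counts for two consecutive 6-month periods.
previous = {"/seo-audit/": 1200, "/blog/core-web-vitals/": 800, "/contact/": 150}
current = {"/seo-audit/": 1350, "/blog/core-web-vitals/": 420, "/contact/": 140}

for page, before, after, change in declining_pages(current, previous):
    print(f"{page}: {before} -> {after} ({change})")
```

Sorting by the absolute loss rather than the percentage keeps low-traffic pages from crowding out the pages that matter most.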

Declining content usually means that competing pages have overtaken you in the rankings.

Start by doing a simple Google search and see why other pages are performing better.

Sometimes, Google also changes the keyword specificity of a particular search term.

It reclassifies certain keywords or topics from commercial intent to informational intent or vice versa.

It might be a good idea to amend the page.

Remember, both the content and the history of the URL are instrumental in rankings.

Don’t simply discard an existing URL with a rich history in favor of a new one.

Improve and update your content.

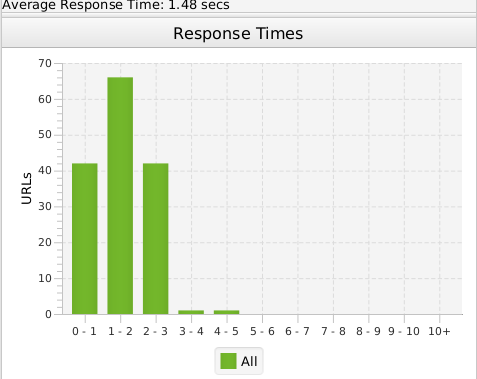

Check Page speed

The best way to check the page speed of each page on your site is to check the Google Analytics page speed report.

You can get this report by browsing to Google Analytics > Behavior > Site Speed > Page Timings

This report is only available in Universal Analytics; it is not available in GA4.

You can also connect Screaming Frog to PageSpeed Insights to get individual page scores.

Alternatively, you can check the response time report in Screaming Frog.

Use this report to find the pages that load the slowest.

Use PageSpeed Insights or Lighthouse to find out why they load slowly and fix the page speed.

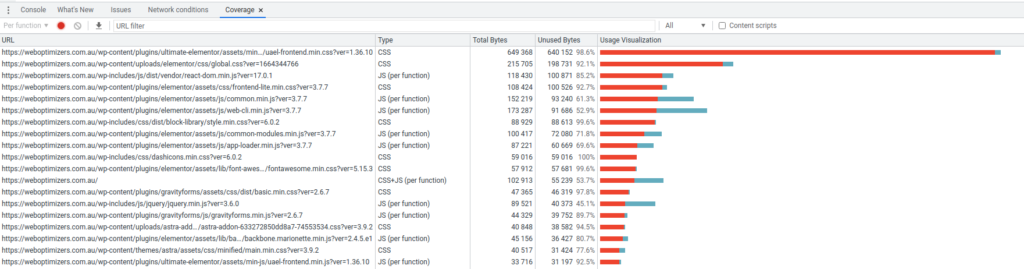

You could also use the chrome dev tools to audit the unused resources.

- Open your webpage in Chrome

- Press F12 to open DevTools

- Press Ctrl + Shift + P to open the command menu

- Type “coverage” and select “Show Coverage”

- Hit the round grey record button

- The page will draw up the usage visualisation for all the resources used (CSS/JS)

- You can then click on a resource and the editor will highlight between blue/red for what is and isn’t used

Work on optimising the most used CSS / JS files.
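The Coverage panel can also export its results as JSON, which makes it possible to rank files by how much of each went unused. The sketch below assumes the export is a list of entries with `url`, `text` and `ranges` fields, where each range marks a used span with `start` and `end` offsets. That layout is an assumption about the export format, so check it against your own file. The sample data and the function name `unused_share` are hypothetical.

```python
def unused_share(coverage_entries):
    """Return (url, total_chars, unused_chars, unused_fraction) per resource,
    with the largest amount of unused code first."""
    results = []
    for entry in coverage_entries:
        total = len(entry["text"])
        # Each range is assumed to mark a span of the file that was executed or applied.
        used = sum(r["end"] - r["start"] for r in entry["ranges"])
        unused = total - used
        fraction = unused / total if total else 0.0
        results.append((entry["url"], total, unused, fraction))
    return sorted(results, key=lambda row: row[2], reverse=True)


# Hypothetical coverage data; a real export could be loaded with json.load().
sample = [
    {"url": "https://example.com/app.js", "text": "x" * 400,
     "ranges": [{"start": 0, "end": 300}]},
    {"url": "https://example.com/style.css", "text": "x" * 1000,
     "ranges": [{"start": 0, "end": 200}, {"start": 500, "end": 600}]},
]

for url, total, unused, fraction in unused_share(sample):
    print(f"{url}: {unused} of {total} characters unused ({fraction:.0%})")
```

Coverage only reflects what ran during the recording, so code used on other pages or after user interaction will show up as unused.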

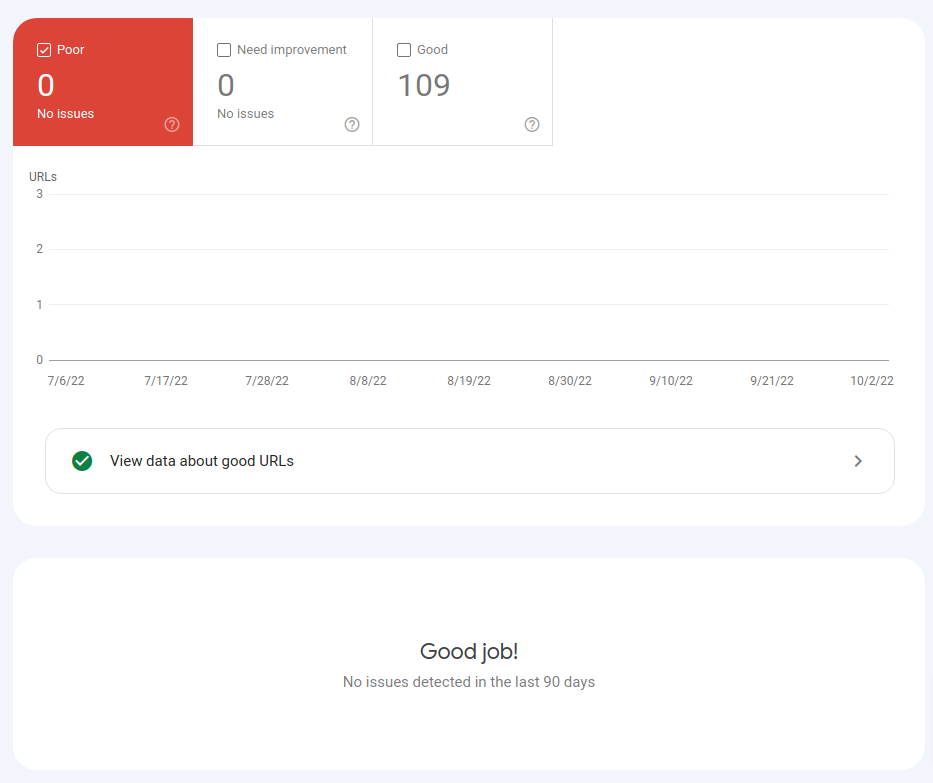

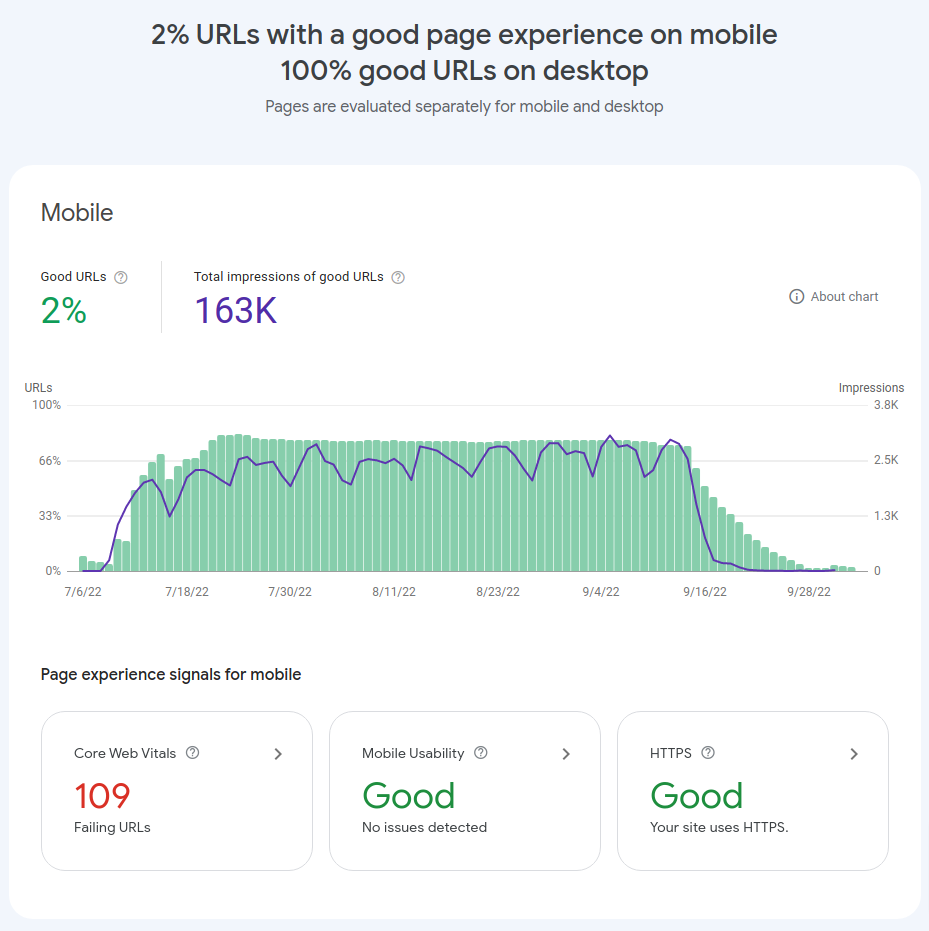

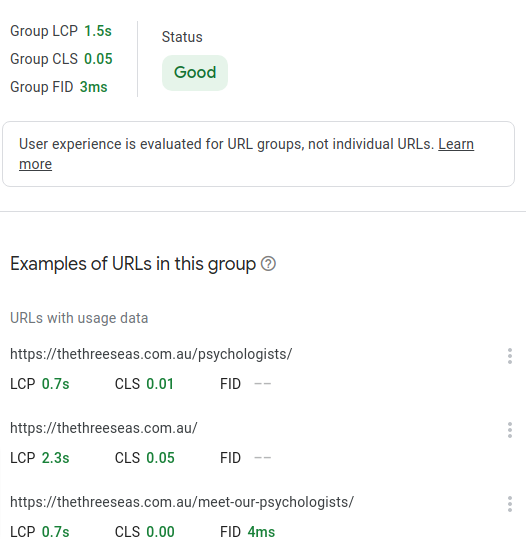

Check Core Web Vitals

Core Web Vitals are metrics introduced by Google to check how a page performs for user experience.

They measure a page’s load time, its interactivity and the visual stability of its elements as the layout shifts.

Here is a report for core web vitals from Google search console

You can also look at the page experience report in search console.

A further breakdown of what’s being measured is available by clicking on the link.

It measures

- Largest Contentful Paint (LCP) – measures how fast the page loads. Ideal loading speed is 2.5 seconds or less.

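- First Input Delay (FID) – measures interactivity. Ideal value is 100 milliseconds or less. Note that Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital in March 2024; the ideal INP is 200 milliseconds or less.

- Cumulative Layout Shift (CLS) – measures visual stability. Ideal CLS is 0.1 or less. If you have dynamic JavaScript or Google ads running on the site, this score is likely to suffer.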
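As a quick illustration, the ideal values listed above can be turned into a simple pass/fail check for a page's measurements. The sketch below uses the LCP, FID and CLS values stated in the list. The measurements and the function name `failing_metrics` are hypothetical.

```python
# Ideal ("good") upper limits for each metric, as stated in the list above.
THRESHOLDS = {
    "LCP": 2.5,  # seconds
    "FID": 100,  # milliseconds
    "CLS": 0.1,  # unitless layout shift score
}


def failing_metrics(measurements):
    """Return the metrics whose measured value exceeds the ideal limit."""
    return {
        name: value
        for name, value in measurements.items()
        if name in THRESHOLDS and value > THRESHOLDS[name]
    }


# Hypothetical measurements for a single page.
page = {"LCP": 3.1, "FID": 80, "CLS": 0.25}
print(failing_metrics(page))  # {'LCP': 3.1, 'CLS': 0.25}
```

A value exactly on the limit passes, which matches the "or less" wording of the ideal values.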

Audit duplicate content

You can end up with duplicate content in a number of ways. The most common cause is different URLs serving the same content.

This usually happens with CMSes like WordPress, Shopify, Magento and even Prestashop.

You can use the noindex directives on specific pages or even entire taxonomies.

The best way to detect duplicate or near duplicate pages is to use Screaming Frog to conduct an audit.

After you have crawled the site, simply head to the sidebar to find duplicate pages.

Screaming Frog detects exact duplicates and near duplicates.

It detects exact duplicates by comparing the MD5 hashes of all pages with each other.

With the near duplicates detection, it uses a 90% match using the minhash algorithm.

Both of these are extremely useful to detect content issues with your site.
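To make the two ideas concrete, here is a minimal sketch of both techniques: an MD5 hash comparison for exact duplicates, and a basic MinHash over three-word shingles to estimate how similar two pages are. It is an illustration of the general approach, not Screaming Frog's implementation. The shingle size, the number of hash functions, the sample text and all function names are assumptions.

```python
import hashlib
import re


def md5_of(text):
    """Hash of the full page text; identical pages produce identical hashes."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def shingles(text, size=3):
    """Break text into overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    count = max(len(words) - size + 1, 1)
    return {" ".join(words[i:i + size]) for i in range(count)}


def minhash_signature(text, num_hashes=128):
    """For each seeded hash function, keep the smallest hash over all shingles."""
    page_shingles = shingles(text)
    signature = []
    for seed in range(num_hashes):
        salt = str(seed).encode("utf-8")
        signature.append(min(
            int.from_bytes(hashlib.md5(salt + s.encode("utf-8")).digest()[:8], "big")
            for s in page_shingles
        ))
    return signature


def estimated_similarity(text_a, text_b, num_hashes=128):
    """Fraction of matching signature positions, which estimates shingle overlap."""
    sig_a = minhash_signature(text_a, num_hashes)
    sig_b = minhash_signature(text_b, num_hashes)
    return sum(a == b for a, b in zip(sig_a, sig_b)) / num_hashes


# Hypothetical page texts, for illustration only.
page_a = "Blue running shoes for men with free shipping across Australia"
page_b = "Blue running shoes for men with free shipping across Australia"
page_c = "Our guide explains how robots files control search engine crawlers"

print(md5_of(page_a) == md5_of(page_b))           # exact duplicate
print(estimated_similarity(page_a, page_c) >= 0.9)  # near duplicate at a 90% threshold?
```

The exact check is cheap but breaks on a single changed character, which is why a similarity estimate with a threshold is needed to catch near duplicates.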

If you run an ecommerce website, be careful with both near and exact duplicates. You may have duplicates detected for

- Product pages with attributes

- Category pages accessible via different URLs

- Product pages accessible via page type like single page, grouped product, complex product or bundled product

Redirection (3XX) and Broken Links (4XX)

Broken links or redirects lead to poor user experience.

With that said, the best way to transfer an existing page’s authority to a new page is a 301 redirect.

Use redirects if:

- You have moved the domain or the page and want to pass the authority

- People can access your website through several different versions of the URL, such as WWW vs non-WWW

- You are merging websites

- You removed a page and instead of a 404 page not found you want them to go to a more relevant page.

You can use permanent redirects if the page has moved permanently. These include 301, 308, meta refresh, HTTP refresh and JavaScript location.

Use temporary redirects when the redirect may be removed in the future. These include 302, 303, 307, meta refresh and HTTP refresh.

That said, we recommend avoiding both meta refresh and HTTP refresh.
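When reviewing a crawl export, it helps to sort each URL into one of these buckets by its HTTP status code. The sketch below groups the status codes named above. The crawl results and the function names `classify_status` and `group_by_issue` are hypothetical.

```python
from collections import defaultdict

# HTTP status codes for the redirect types named in the text above.
PERMANENT = {301, 308}
TEMPORARY = {302, 303, 307}


def classify_status(status_code):
    """Map an HTTP status code to the audit category it belongs to."""
    if status_code in PERMANENT:
        return "permanent redirect"
    if status_code in TEMPORARY:
        return "temporary redirect"
    if 400 <= status_code < 500:
        return "broken link"
    return "other"


def group_by_issue(crawl_results):
    """Group (url, status_code) pairs by audit category."""
    groups = defaultdict(list)
    for url, status_code in crawl_results:
        groups[classify_status(status_code)].append(url)
    return dict(groups)


# Hypothetical crawl results, for illustration only.
crawl = [
    ("https://example.com/old-page/", 301),
    ("https://example.com/sale/", 302),
    ("https://example.com/missing/", 404),
    ("https://example.com/", 200),
]

for category, urls in group_by_issue(crawl).items():
    print(category, urls)
```

Temporary redirects that have been in place for a long time are worth a second look, since they may really be permanent moves.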

Check Canonicalization

When you have a single page accessible from multiple URLs, Google sees them as duplicates. In the absence of canonical instructions, Google will choose a single URL for all the pages.

Google will also see different URLs with similar content as duplicates. In those cases too, Google will either respect your canonical directive or choose one itself.

Canonicals help deal with crawl budgets.

If you have multiple versions of the same page accessible from different URLs, you want Google to crawl one version regularly.

You would also want Google to ignore the other versions, to help Google find the most relevant content faster and more often.

You can use a rel=canonical tag in the head section of your page to tell Google which URL is the canonical page.

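Note that Google treats different language versions as duplicates only when the main content is in the same language, for example when only the header and footer are translated. In that case, they must be canonicalized.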

Use the canonical section of the Screaming Frog audit to check for issues with canonicals.

Self referencing is the method of pointing to the page itself as the canonical version.

It is especially useful because while Google does a great job of finding canonicals, parameters in URLs may be unique to your domain and confusing.

It is always best practice to self reference canonicals.

Google’s John Mueller recently stated: “It’s a great practice to have a self-referencing canonical but it’s not critical.”
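A self-referencing canonical is easy to verify from a page's HTML. The sketch below extracts the rel=canonical link with Python's standard HTML parser and compares it with the URL the page was fetched from. The URLs, the sample HTML and the function name `canonical_status` are hypothetical, and the comparison is an exact string match.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr_map.get("href")


def canonical_status(page_url, html):
    """Report whether the page has a canonical tag and where it points."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return "missing"
    if parser.canonical == page_url:
        return "self-referencing"
    return "points elsewhere"


# Hypothetical page, for illustration only.
html = '<head><link rel="canonical" href="https://example.com/shoes/"></head>'

print(canonical_status("https://example.com/shoes/", html))
print(canonical_status("https://example.com/shoes/?colour=blue", html))
print(canonical_status("https://example.com/shoes/", "<head></head>"))
```

The second call shows the parameter case described above: the filtered URL points to the clean URL as its canonical, which is usually what you want.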

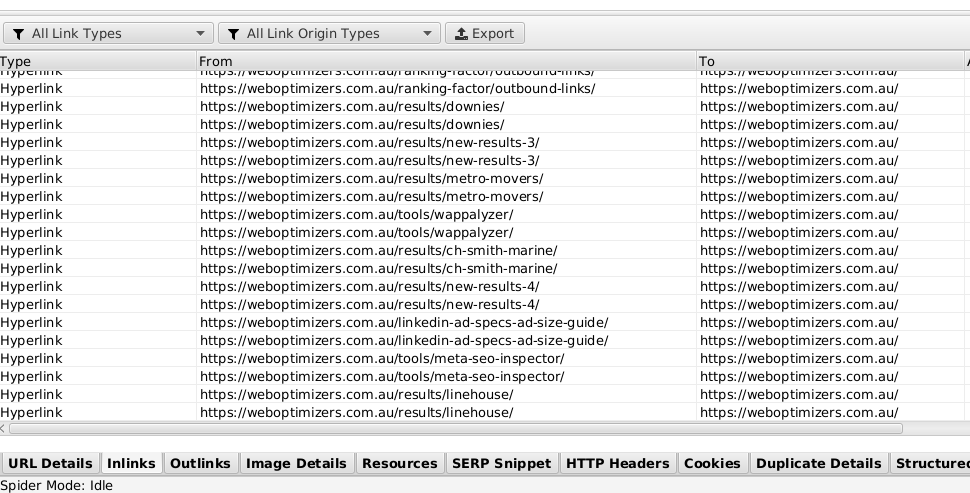

Internal Links

Consider Wikipedia’s rankings on Google.

Wikipedia ranks for most search terms on Google because of the large volume of content and great internal links.

Internal links are a great way for you to tell Google what you think the page is about.

The words that you use to create the link are called anchor text.

Make sure you use the right anchor text to link to relevant content.

You can do an internal link audit via Screaming Frog.

Choose a URL in Screaming Frog and then choose the Inlinks tab at the bottom to look at all incoming internal links.

Make sure you look at the anchor text section of the Inlinks to see what anchor text you are using to link your content.

With anchor text, be specific.
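For a single page, the same kind of review can be sketched in a few lines: collect every internal link with its anchor text, then flag the anchors that say nothing about the target page. The sample HTML, the list of generic phrases and the function names are hypothetical. A crawler like Screaming Frog does this across the whole site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Assumed examples of anchor text that is too generic to describe the target page.
GENERIC_ANCHORS = {"click here", "read more", "here", "learn more"}


class LinkParser(HTMLParser):
    """Collects (absolute_url, anchor_text) pairs for every <a href> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            anchor_text = " ".join("".join(self._text).split())
            self.links.append((self._href, anchor_text))
            self._href = None


def internal_links(page_url, html):
    """Return links that stay on the same host as page_url."""
    parser = LinkParser(page_url)
    parser.feed(html)
    host = urlparse(page_url).netloc
    return [(url, text) for url, text in parser.links if urlparse(url).netloc == host]


def vague_anchors(links):
    """Return the links whose anchor text is one of the generic phrases."""
    return [(url, text) for url, text in links if text.lower() in GENERIC_ANCHORS]


# Hypothetical page content, for illustration only.
html = (
    '<p>See our <a href="/seo-audit/">SEO audit services</a>, '
    '<a href="https://example.org/">an external guide</a> or '
    '<a href="/contact/">click here</a>.</p>'
)

links = internal_links("https://example.com/blog/", html)
print(links)
print(vague_anchors(links))
```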

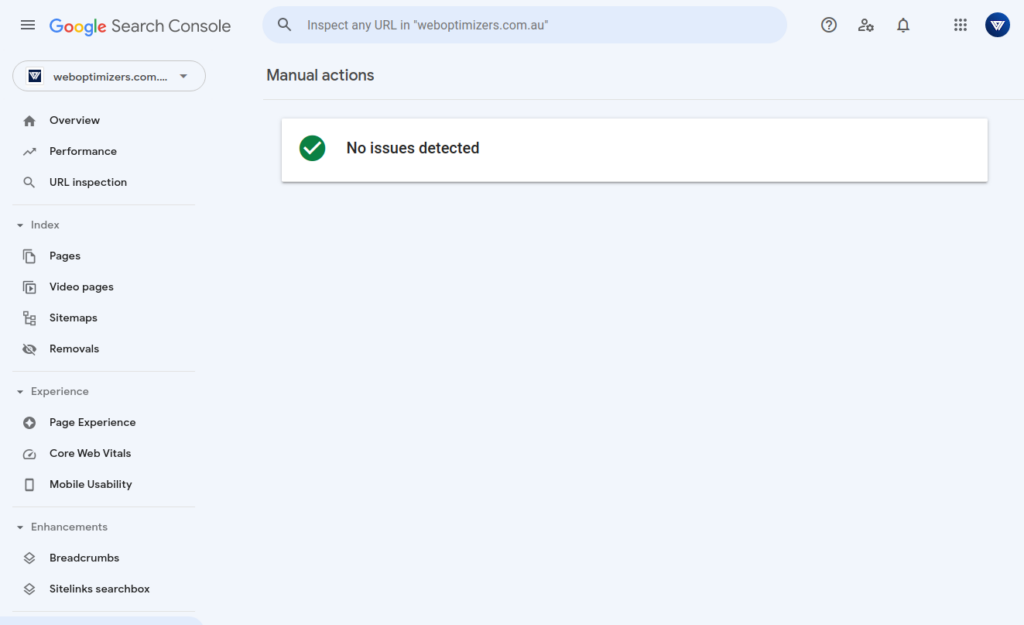

Google Penalties

Sometimes human reviewers at Google decide that your website is not compliant with Google’s webmaster guidelines.

They may also decide that the quality of your top ranked page is lower and you are using other methods to push the page higher in search results.

In such cases, they may decide to levy a penalty on your site.

You are unlikely to have a manual penalty unless you engage in black hat SEO or spamming the SERPs.

You can check if you have been hit with a manual penalty by simply going to the search console and looking at the manual actions tab.