In a recent discussion on LinkedIn, Google’s Gary Illyes suggested that you should disallow crawling of action URLs.

For context, action URLs are URLs intended to perform an action, such as adding a product to a wishlist, a comparison list, or the cart.

A typical example of such a URL would be

https://example․com/product/scented-candle-v1?add_to_cart

and

https://example․com/product/scented-candle-v1?add_to_wishlist

A common problem for eCommerce sites is wasted crawl budget. Gary points out that a common complaint among webmasters is that Google crawls too much, which also wastes bandwidth for the site being crawled.

He suggests that when you look at what Google is crawling, it is far too often action URLs. These action URLs are usually useless for crawlers and your users, and they are not intended to rank either.

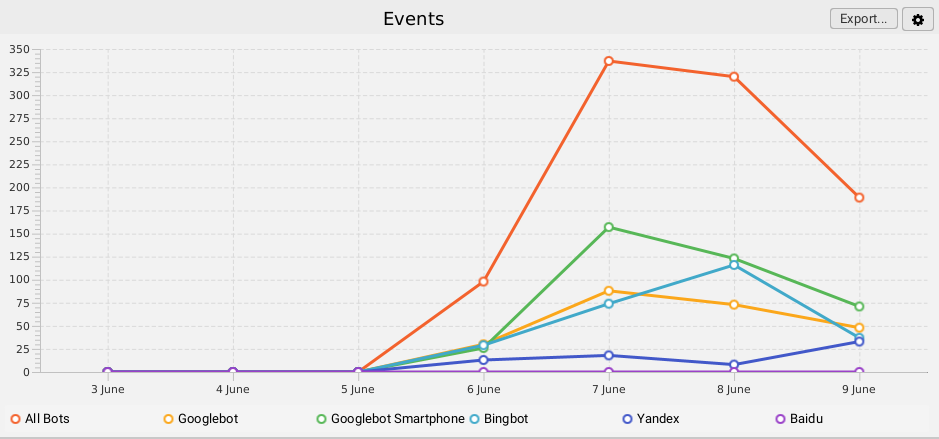

You can verify what Google is crawling using a log analyzer tool like the Screaming Frog Log File Analyser.

If you download access logs from your server, you can segregate crawled URLs using the analyzer. Here is an example.

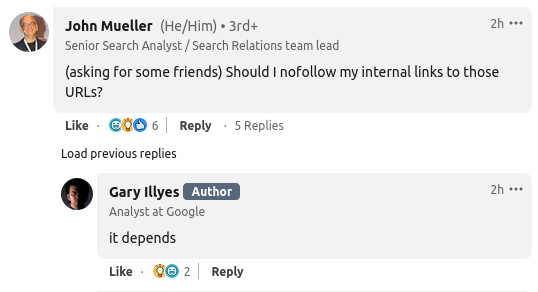
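If you would rather check the logs yourself, the same idea can be sketched in a few lines of Python. This is a minimal sketch, not a replacement for a log analyzer: it assumes the server writes the common "combined" access log format, it assumes the action parameters are named `add_to_cart` and `add_to_wishlist` as in the example URLs above, and it identifies Googlebot by user-agent string only, which can be spoofed. The names `ACTION_PARAMS`, `is_action_url`, and `count_googlebot_action_hits` are hypothetical.

```python
import re
from collections import Counter
from urllib.parse import urlsplit, parse_qs

# Assumed action parameters; adjust to whatever your platform actually uses.
ACTION_PARAMS = {"add_to_cart", "add_to_wishlist"}

# Pulls the request path and user agent out of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def is_action_url(path):
    """Return True if the query string contains one of the action parameters."""
    query = urlsplit(path).query
    # keep_blank_values=True so that a bare "?add_to_cart" is still parsed.
    params = parse_qs(query, keep_blank_values=True)
    return any(name in ACTION_PARAMS for name in params)


def count_googlebot_action_hits(lines):
    """Count Googlebot requests, split into action URLs and everything else."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        # Skip lines that do not parse or that come from other user agents.
        if not match or "Googlebot" not in match.group("agent"):
            continue
        kind = "action" if is_action_url(match.group("path")) else "other"
        hits[kind] += 1
    return hits
```

Passing an open log file to `count_googlebot_action_hits` gives a rough split between action URLs and everything else. If the "action" share is large, that is the wasted crawling Gary is describing.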

John Mueller, senior search analyst, followed up by asking whether one should nofollow internal links to those URLs. Gary responded that it depends. Since these URLs are not intended to rank in search engines and carry no significant user value, it may be safe to nofollow them so that Google does not waste its time or crawl budget on them.

Gary recommends that you should probably add a disallow rule for these action URLs in your robots.txt file. He notes that converting them to the HTTP POST method also works, though many crawlers can and will make POST requests.

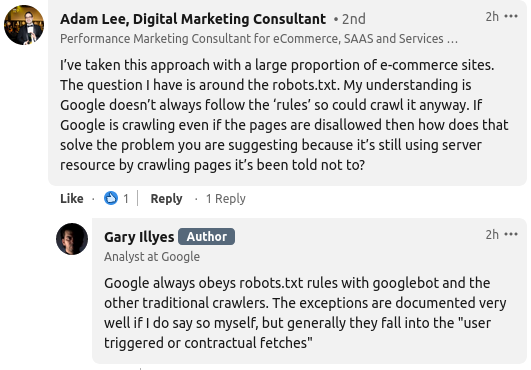
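To make the disallow rule concrete, here is a hedged sketch of what it might look like for the two example URLs earlier in this article, together with a small Python check that the patterns match what you expect. The rules themselves are an assumption based on those example URLs; your parameter names may differ, and a parameter that is not first in the query string (for example `?color=red&add_to_cart`) would need an extra pattern. The matcher is deliberately simplified: it handles the `*` wildcard and a trailing `$`, but ignores `Allow` rules, user-agent groups, comments, and longest-match precedence.

```python
import re

# Assumed rules for the example action URLs; real parameter names vary.
ROBOTS_TXT = """\
User-agent: *
Disallow: /*?add_to_cart
Disallow: /*?add_to_wishlist
"""


def disallow_patterns(robots_txt):
    """Collect Disallow values, ignoring user-agent grouping and Allow rules."""
    patterns = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip():
            patterns.append(value.strip())
    return patterns


def pattern_to_regex(pattern):
    """Translate '*' (any characters) and a trailing '$' (end of URL) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with ".*" where '*' appeared.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query, robots_txt=ROBOTS_TXT):
    """Return True if any Disallow pattern matches from the start of the path."""
    return any(
        pattern_to_regex(p).match(path_and_query)
        for p in disallow_patterns(robots_txt)
    )
```

With these rules, `is_disallowed("/product/scented-candle-v1?add_to_cart")` is true while the plain product page `/product/scented-candle-v1` stays crawlable, which is the outcome you want: the product can still rank, and the action variants are left alone.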

Another user points out that while he has taken this approach before, Google doesn’t always obey the robots.txt file.

According to Gary, Googlebot does obey the robots.txt file, except when the fetch is user-triggered or contractual.

Keep in mind that if you run your site on a CMS such as WordPress or Shopify, you may unknowingly be triggering the “user-triggered” fetches that Gary describes as an exception.